System Overview

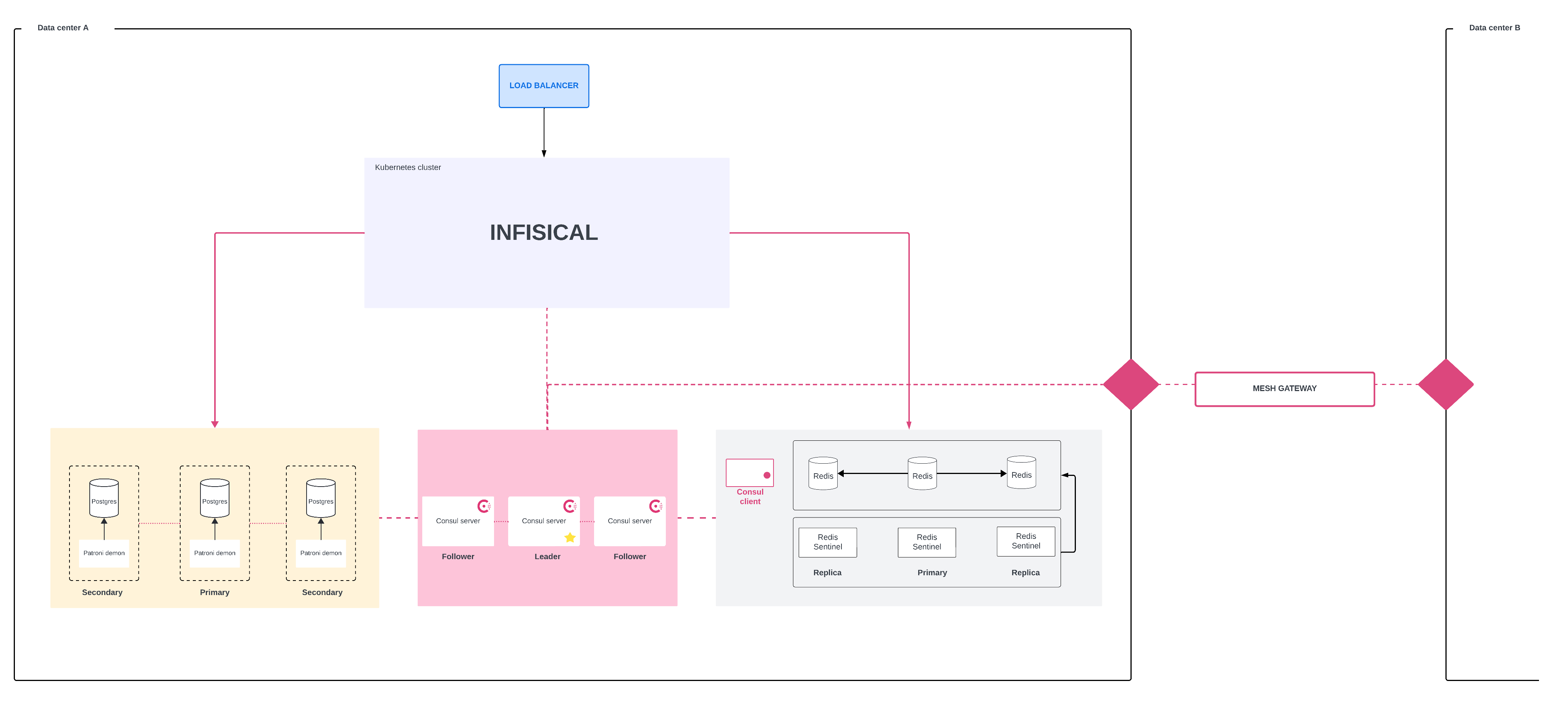

The architecture above utilizes a combination of Kubernetes for orchestrating stateless components and virtual machines (VMs) or bare metal for stateful components.

The infrastructure spans multiple data centers for redundancy and load distribution, enhancing availability and disaster recovery capabilities.

You can duplicate the architecture in multiple data centers and join them via Consul to increase availability. If one data center goes down, the remaining data centers take over its workloads.
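As a rough illustration of cross-data-center takeover, the sketch below asks Consul's health endpoint (`/v1/health/service/<name>` with the `dc` and `passing` query parameters) for healthy instances in each data center in turn and returns the first data center that has any. The agent address, the service name `infisical`, and the data center names `dc1` and `dc2` are assumptions made for the example, not values from this architecture. It also assumes the data centers are already federated so that one agent can answer queries for another.

```python
import json
from urllib.parse import quote, urlencode
from urllib.request import urlopen

# Assumed local Consul agent address, for illustration only.
CONSUL_ADDR = "http://127.0.0.1:8500"


def health_url(service, datacenter, base=CONSUL_ADDR):
    """Build a Consul health URL that returns only passing instances."""
    query = urlencode({"dc": datacenter, "passing": "true"})
    return f"{base}/v1/health/service/{quote(service)}?{query}"


def fetch_json(url):
    """Default fetcher: plain HTTP GET against a Consul agent."""
    with urlopen(url, timeout=2) as resp:
        return json.load(resp)


def first_healthy_datacenter(service, datacenters, fetch=fetch_json):
    """Return (datacenter, [(address, port), ...]) for the first data center
    with at least one passing instance, or None if no data center has one."""
    for dc in datacenters:
        try:
            entries = fetch(health_url(service, dc))
        except OSError:
            continue  # data center unreachable; try the next one
        endpoints = [
            # An empty service address means "use the node's address".
            (e["Service"]["Address"] or e["Node"]["Address"], e["Service"]["Port"])
            for e in entries
        ]
        if endpoints:
            return dc, endpoints
    return None
```

Calling `first_healthy_datacenter("infisical", ["dc1", "dc2"])` would prefer `dc1` and fall back to `dc2` only when `dc1` is unreachable or has no passing instances. In a real deployment this decision is more likely to live in the load balancer or in Consul itself than in client code.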

Stateful vs stateless workloads

To reduce the challenges of managing state within Kubernetes, including storage provisioning, persistent volume management, and intricate data backup and recovery processes, we strongly recommend deploying stateful components on Virtual Machines (VMs) or bare metal. As depicted in the architecture, Infisical is intentionally deployed on Kubernetes to leverage its strengths in managing stateless applications. Being stateless, Infisical fully benefits from Kubernetes’ features like horizontal scaling, self-healing, and rolling updates and rollbacks.

Core Components

Kubernetes Cluster

Infisical is deployed on a Kubernetes cluster, which allows for container management, auto-scaling, and self-healing capabilities. A load balancer sits in front of the Kubernetes cluster, directing traffic and ensuring even load distribution across the application nodes. This is the entry point where all other services will interact with Infisical.

Consul as the Networking Backbone

Consul is a critical component in the reference architecture, serving as a unified service networking layer that links and controls services across different environments and data centers. It functions as the common communication channel between data centers for stateless applications on Kubernetes and stateful services such as databases on dedicated VMs or bare metal.

Postgres with Patroni

The database layer is powered by Postgres, with Patroni providing automated management to create a high availability setup. Patroni leverages Consul for several critical operations:

- Redundancy: By managing a cluster of one primary and multiple secondary Postgres nodes, the architecture ensures redundancy. The primary node handles all the write operations, and secondary nodes handle read operations and are prepared to step up in case of primary failure.

- Failover and Service Discovery: Consul is integrated with Patroni for service discovery and health checks. When Patroni detects that the primary node is unhealthy, it uses Consul to elect a new primary node from the secondaries, thereby ensuring that the database service remains available.

- Data Center Awareness: Patroni configured with Consul is aware of the multi-data center setup and can handle failover across data centers if necessary, which further enhances the system’s availability.
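The failover behavior described above can be pictured with a toy model of a leader lock that expires unless the primary keeps renewing it. This is a simplified sketch and not Patroni's implementation: the class and function names are hypothetical, time is passed in explicitly, and the lock is an in-memory object standing in for the leader key Patroni keeps in Consul. Picking the replica with the least replication lag is also a simplification of how candidates compete for the lock.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class LeaderLock:
    """In-memory stand-in for a leader key with a time-to-live."""
    ttl: float
    holder: Optional[str] = None
    expires_at: float = 0.0

    def renew(self, node: str, now: float) -> bool:
        """The current holder extends the lock; any other node is refused."""
        if self.holder == node and now < self.expires_at:
            self.expires_at = now + self.ttl
            return True
        return False

    def acquire(self, node: str, now: float) -> bool:
        """A node takes the lock only if it is free or has expired."""
        if self.holder is None or now >= self.expires_at:
            self.holder, self.expires_at = node, now + self.ttl
            return True
        return False


def elect(lock: LeaderLock, replica_lag: Dict[str, int], now: float) -> Optional[str]:
    """Let replicas try for the lock, least-lagged first, and return the leader."""
    for node in sorted(replica_lag, key=replica_lag.get):
        if lock.acquire(node, now):
            return node  # this replica is promoted to primary
    # Nobody could take the lock: the old leader is still valid, or there is none.
    return lock.holder if now < lock.expires_at else None
```

While the primary keeps calling `renew`, `elect` returns the existing leader. Once renewals stop and the time-to-live passes, the next call to `elect` promotes a replica, and the old primary can no longer renew.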

Redis with Redis Sentinel

For caching and message brokering:

- Redis is deployed with a primary-replica setup.

- Redis Sentinel monitors the Redis nodes, providing automatic failover and service discovery.

- Write operations go to the primary node, and replicas serve read operations, ensuring data integrity and availability.
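The read/write split and the effect of a failover can be sketched with a small in-memory router. This is only a model of the routing rule above, under assumptions: the `Topology` class, the node addresses, and the short list of write commands are made up for the example, and no Redis connection is opened.

```python
import itertools
from typing import List

# Illustrative subset of commands that modify data.
WRITE_COMMANDS = {"SET", "DEL", "INCR", "EXPIRE", "LPUSH"}


class Topology:
    """Tracks which node is primary and spreads reads across replicas."""

    def __init__(self, primary: str, replicas: List[str]):
        self.primary = primary
        self.replicas = list(replicas)
        self._cycle = itertools.cycle(self.replicas)

    def route(self, command: str) -> str:
        """Writes go to the primary; reads rotate over the replicas."""
        if command.upper() in WRITE_COMMANDS or not self.replicas:
            return self.primary
        return next(self._cycle)

    def failover(self, promoted: str) -> None:
        """Apply a promotion: the chosen replica becomes the new primary."""
        self.replicas.remove(promoted)
        self.primary = promoted
        self._cycle = itertools.cycle(self.replicas)


topo = Topology("redis-1:6379", ["redis-2:6379", "redis-3:6379"])
topo.route("SET")   # goes to the primary
topo.route("GET")   # goes to a replica
```

In a real client this bookkeeping is usually delegated to a Sentinel-aware library. For example, redis-py's `redis.sentinel.Sentinel` exposes `master_for` and `slave_for` to obtain connections to the current primary and to a replica.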